A new feature in version 5.2.5 of BackupAssist is support for backing up to an Amazon S3 bucket via the service provided by s3rsync.com.

To set this up, you will need:

- a copy of BackupAssist

- an Amazon Web Services account

- an account with s3rsync.com

In your Amazon Web Services account, you will need to obtain your Access Key ID and generate a Secret Access Key. Then you will need to create an S3 bucket to use for your backups. See http://www.labnol.org/internet/tools/amazon-s3-simple-storage-service-guide/3889/ for a good introduction to these topics.

When you sign up for an s3rsync.com account, you will be provided with a username and a private SSH key file. You will need to save the SSH key file somewhere on the machine on which you wish to run BackupAssist.

Once you have performed these preliminary steps, you are ready to set up your job in BackupAssist.

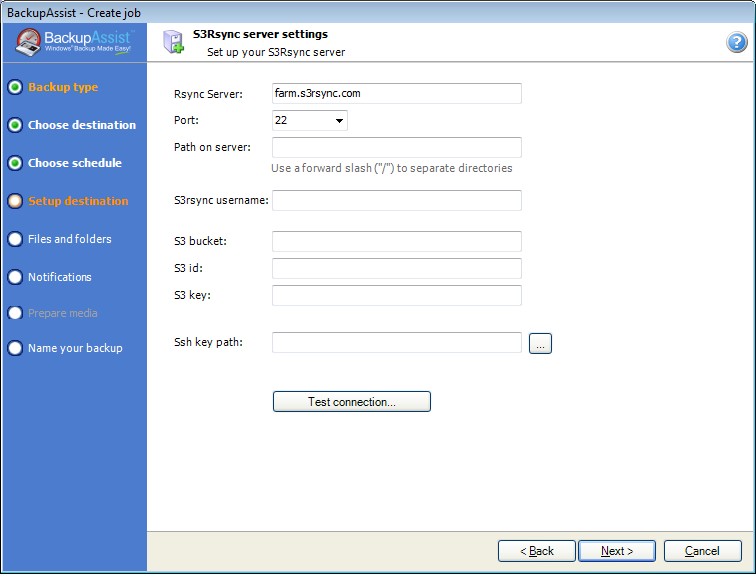

Create a new Rsync job and choose S3Rsync as the destination. If you want your job to run automatically each day, select the Mirror scheme. Next you will be presented with the S3Rsync server settings screen:

- Rsync Server: this should be farm.s3rsync.com (the default setting) unless you have been advised otherwise by s3rsync.com

- Port: this should be 22

- Path on server: you can leave this blank unless you want to set up multiple backup jobs using the same bucket (not recommended)

- S3rsync username: your username supplied by s3rsync.com (note: this is different to your Amazon username)

- S3 bucket: the name of the S3 bucket you created

- S3 id: your S3 Access Key ID

- S3 key: your S3 Secret Access Key

- Ssh key path: the location of the saved SSH key file provided by s3rsync.com
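If you want to sanity-check these values before typing them into BackupAssist (for example, when preparing several machines), the fields can be modelled as a small Python record. This is a hypothetical sketch: the class and field names are our own, not a BackupAssist API, and the checks only mirror the guidance in the list above (default server farm.s3rsync.com, port 22, key file saved locally).

```python
from dataclasses import dataclass
from pathlib import Path

# Hypothetical record of the S3Rsync settings screen; names are illustrative only.
@dataclass
class S3RsyncSettings:
    s3rsync_username: str   # username supplied by s3rsync.com (not your Amazon username)
    s3_bucket: str          # name of the S3 bucket you created
    s3_id: str              # S3 Access Key ID
    s3_key: str             # S3 Secret Access Key
    ssh_key_path: str       # where you saved the SSH key file from s3rsync.com
    rsync_server: str = "farm.s3rsync.com"  # default unless s3rsync.com advises otherwise
    port: int = 22
    path_on_server: str = ""  # leave blank unless several jobs share one bucket

    def problems(self) -> list[str]:
        """Return human-readable issues; an empty list means the fields look complete."""
        issues = []
        for name in ("s3rsync_username", "s3_bucket", "s3_id", "s3_key", "ssh_key_path"):
            if not getattr(self, name).strip():
                issues.append(f"{name} is empty")
        if self.port != 22:
            issues.append("port should normally be 22")
        # Only check the file when a path was actually entered.
        if self.ssh_key_path and not Path(self.ssh_key_path).is_file():
            issues.append(f"SSH key file not found: {self.ssh_key_path}")
        return issues
```

Printing `problems()` before you start the job wizard catches the most common slip, a key file saved somewhere other than where you point BackupAssist.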

Once you have entered these details, click Next to select which files you want to back up and then complete the job setup.

17 thoughts on “Using BackupAssist for Rsync with Amazon S3”

This is a great walk through on setting it up.

I have two questions, though. I have already asked support, but I’m sure others will ask too.

There is a 10GB limit on each bucket. How can you get around that if a client wants to back up 30GB of data?

I noticed today that the file saved to the S3 servers is a SINGLE TAR file. So to restore a file, does one have to download the whole TAR file?

Hi Alan,

Thanks for your questions. There is no limit on the number of buckets you can use. If you wish to back up more than 10GB, we suggest that you set up multiple BackupAssist jobs, keeping each individual job under the 10GB limit. Please refer to the following FAQs on the s3rsync.com website:

What are the storage limitations?

How can I bypass this limit?
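One way to plan the split is to group the top-level folders you want to protect so that each group, and therefore each BackupAssist job and bucket, stays under the 10GB limit. The sketch below uses first-fit decreasing packing, a heuristic that usually comes close to the fewest jobs; the function name, folder names and sizes are hypothetical, and you still create each job by hand in BackupAssist.

```python
def plan_jobs(folder_sizes_gb: dict[str, float], limit_gb: float = 10.0) -> list[list[str]]:
    """Group folders into jobs whose total size stays strictly under limit_gb."""
    jobs: list[list[str]] = []
    totals: list[float] = []
    # Place the largest folders first; this tends to leave fewer half-empty jobs.
    for name, size in sorted(folder_sizes_gb.items(), key=lambda kv: kv[1], reverse=True):
        if size >= limit_gb:
            raise ValueError(f"{name} ({size} GB) cannot fit in one job; split it further")
        # Put the folder in the first existing job that still has room.
        for i, total in enumerate(totals):
            if total + size < limit_gb:
                jobs[i].append(name)
                totals[i] += size
                break
        else:
            # No existing job has room, so start a new job (and bucket).
            jobs.append([name])
            totals.append(size)
    return jobs
```

For a 30GB data set made of five folders, `plan_jobs({"Accounts": 6, "Shared": 9, "Email": 4, "Scans": 3, "Archive": 8})` returns `[["Shared"], ["Archive"], ["Accounts", "Scans"], ["Email"]]`: four jobs, since three jobs of exactly 10GB each would not be under the limit.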

BackupAssist 5.3, which we plan to release in August, will include a restore tool to provide an easy way to select and restore individual files from rsync backups.

In the meantime, it’s possible to restore individual files using the rsync command-line tool. The s3rsync.com website has instructions for doing this. You can use the copy of rsync.exe that we ship with BackupAssist. It’s located in “C:\ProgramData\BackupAssist v5\temp\Rsync” on Windows Vista and Server 2008, and in “C:\Documents and Settings\All Users\Application Data\BackupAssist v5\temp\Rsync” on older versions of Windows.

David
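As a rough illustration of the command-line restore described above, this Python sketch assembles an rsync invocation that uses the bundled rsync.exe and connects over SSH with the s3rsync.com key. Only the generic rsync options (`-a`, `-v`, `-e`) and the `user@host:path` form are standard rsync; the remote path is a placeholder, because the exact syntax s3rsync.com expects (bucket name, credentials and so on) is defined in its own instructions, which this sketch does not reproduce. It also assumes an `ssh` client is available on the PATH, and the function name is hypothetical.

```python
import shlex
from pathlib import PureWindowsPath

# Bundled rsync.exe location on Windows Vista / Server 2008, as given above.
RSYNC_DIR = PureWindowsPath(r"C:\ProgramData\BackupAssist v5\temp\Rsync")

def build_restore_command(username: str, key_path: str, remote_path: str,
                          local_dest: str, server: str = "farm.s3rsync.com",
                          port: int = 22) -> list[str]:
    """Build an rsync argument list that pulls remote_path back to local_dest over SSH.

    remote_path is a placeholder: take its exact form from s3rsync.com's restore instructions.
    """
    # rsync splits the -e string itself and honours quotes, so quote the key path.
    ssh_cmd = f"ssh -i {shlex.quote(key_path)} -p {port}"
    return [
        str(RSYNC_DIR / "rsync.exe"),
        "-av",            # archive mode (recursive, keep times/permissions), verbose
        "-e", ssh_cmd,    # run the transfer over SSH with the s3rsync.com key
        f"{username}@{server}:{remote_path}",
        local_dest,
    ]
```

On the backup machine you would pass the list to `subprocess.run(cmd, check=True)`; printing it first is a cheap way to check the quoting.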

David,

So straight from BA I would have the ability to restore a file? I looked at the Rsync command and decided it’s easier to download the TAR.

At the moment, I can only access the S3 store and download the TAR file. While it works, having to download a large amount of unneeded data doesn’t really appeal (especially if it’s a large file).

Looking forward to 5.3; if you need a tester, look me up.
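Until the restore tool arrives, a downloaded TAR does not have to be unpacked in full. Python's standard `tarfile` module can pull out a single member, as in this sketch. It assumes the archive is an ordinary tar (optionally gzip, bz2 or xz compressed, which mode `"r:*"` detects) and that you know the member's path inside it (`tar.getnames()` lists them); the function name is hypothetical. It still needs the whole TAR on disk; it only saves the time and space of extracting everything.

```python
import tarfile
from pathlib import Path

def extract_one(tar_path: str, member_name: str, dest_dir: str) -> Path:
    """Copy a single file out of a backup TAR into dest_dir and return its new path."""
    # "r:*" lets tarfile detect gzip/bz2/xz compression automatically.
    with tarfile.open(tar_path, "r:*") as tar:
        src = tar.extractfile(member_name)  # KeyError if the name is not in the archive
        if src is None:
            raise ValueError(f"{member_name} is not a regular file")
        # Write only the base name, so paths stored in the archive cannot escape dest_dir.
        out = Path(dest_dir) / Path(member_name).name
        out.write_bytes(src.read())
    return out
```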

I really appreciate the backup to S3 feature. I am not 100% convinced about using a third-party service to back up to another third-party service.

It seems to be another layer in the backup process that could result in failed backups. Is there any way that BA could back up to S3 directly?

I also notice that the s3rsync service charges per hour of use, which would presumably penalise users with slow internet connections.

Has anyone used the s3rsync service from Australia who can confirm what sort of overhead to expect? What transfer speeds should I expect on a standard Telstra ADSL2 port?

Damien,

I just uploaded 50MB in 16 minutes. This was my first seed, so it took its time; the next backup will take about 5 minutes.

I am on a Telstra ADSL2 link, and it’s fine.

After the initial seed, there isn’t much data going up. I had a client I was testing it all with, and they were changing 30-60MB a day on a 15GB share (this was locally, not to S3), but it shows that after the seed, not much changes.
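These figures also give a back-of-the-envelope estimate for the daily job. Assuming "50mb" means megabytes, the seed ran at 50 / 16 = 3.125 MB per minute, so 30-60MB of daily changes would take roughly 10-20 minutes at the same rate. The sketch below just does that arithmetic (the function name is hypothetical). Treat the result as an upper-bound guess: rsync's delta transfer can send less than the full size of changed files, and the 30-60MB figure was measured against a local target, not S3.

```python
def upload_minutes(megabytes: float, mb_per_minute: float) -> float:
    """Minutes needed to push a given amount of changed data at a measured rate."""
    return megabytes / mb_per_minute

# Rate measured during the first seed: 50 MB in 16 minutes (assumes MB, not Mbit).
seed_rate = 50 / 16                    # 3.125 MB per minute
low = upload_minutes(30, seed_rate)    # 30 MB of daily changes
high = upload_minutes(60, seed_rate)   # 60 MB of daily changes
print(f"{seed_rate:.3f} MB/min -> {low:.1f} to {high:.1f} minutes per day")
# prints: 3.125 MB/min -> 9.6 to 19.2 minutes per day
```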

And as for the pricing of s3rsync, it’s very cheap anyway: $19 for 380 hours is nothing.
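To see how the per-hour pricing interacts with connection speed (the concern raised about slow links), the sketch below converts a transfer into billed hours at $19 per 380 hours, which works out to $0.05 per hour. It assumes billing is strictly pro-rata by connected time, with no rounding up and no minimum charge; s3rsync.com's own pricing terms decide how hours are actually counted. The function names are hypothetical.

```python
PRICE_USD = 19.0       # price quoted above
HOURS_INCLUDED = 380   # hours included for that price
rate_per_hour = PRICE_USD / HOURS_INCLUDED  # 0.05 USD per hour

def billed_hours(megabytes: float, mb_per_hour: float) -> float:
    """Connected time needed to transfer the data at a steady rate."""
    return megabytes / mb_per_hour

def session_cost(megabytes: float, mb_per_hour: float) -> float:
    """Cost assuming strictly pro-rata billing (no rounding up, no minimum charge)."""
    return billed_hours(megabytes, mb_per_hour) * rate_per_hour
```

At the seed rate measured above (3.125 MB per minute, i.e. 187.5 MB per hour), a 15GB (15,000MB) initial seed would take 80 hours, or about $4.00; halving the upload speed doubles both figures, which is exactly the penalty for slower connections.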

I’m not fully sold on the whole system either, but I’m testing it in-house. With the Firefox plugin for accessing the buckets, it’s easy. Once the new version of BA comes out, restoring files should be easier.

The only thing missing then is a scheduling system, but I’m sure they are working on that for us 🙂

Hello all,

We’ve realized that we didn’t explain why we put this feature in – and that’s led to some confusion.

To be clear, BackupAssist is not affiliated with S3Rsync: we are not the same company, we don’t receive any commissions, and we are not endorsing their offering in any way.

Instead, our aim has always been to simplify configuration and setup, minimize mistakes and improve reliability. When we found that some of our users were using S3Rsync and this was causing some support load, we decided to enhance our software to make it easier to configure (thereby reducing user frustration and our support load at the same time).

We do have plans to support a range of Rsync destinations over the next 6-12 months, to provide everyone with a variety of hosting options, whether that’s self-managed, with one of our partners, or with a third party.

S3Rsync falls into the 3rd party category 🙂

I hope this clears up any misunderstandings.

Regards,

Linus

Hi,

Thanks, Alan, for the info.

In my impatience, I signed up for s3rsync on Friday (I already had an Amazon S3 account, so setup was pretty straightforward). I had been using the Firefox plugin to manage buckets and upload data too.

My backups seem to be averaging around half my upload capacity through Telstra, which roughly equates to 200Mb per hour.

To Linus: thanks for the explanation. My main concern is that, regardless of which third parties are involved, there does seem to be a lot happening in between to accomplish a backup of this nature (i.e. more places in which it can break!).

How can anyone really feel comfortable giving their Amazon S3 ID and key to a company that can’t even proofread its FAQ? What remote island is the S3rsync company located on, anyway? Here are just a few examples:

- What’s wrong abut mounting my S3 bucket as local disk drive and then use rsync?

- S3rsync resolve this limitation and allows you

- What software my PC/Server need in order to connect to your Rsync server

- How the data is store on my Amazon S3 buckets?

- Any 3rd party tool that let you access your S3 data is good for this purpose

- For example to restore all the directory which start with “site” under /home/customers/

- Other alternative to bypass this limit describe in the next question.

- Are there any overhead?

And on and on…

I must say I would be hesitant to sign up for the S3rsync service myself. It is obviously a good idea, but their website leaves me a little worried as to what country my data is being sent through. I am all for supporting the little guys with big ideas, but who knows how long this service has been around and how long it will last.

Kudos to BackupAssist, though, for being progressive in finding new (and better) ways to back up our data. This software has gotten better each step of the way.

Hi Chris, I would like to address your concern about which country the data is being sent through.

Our solution architecture is built in such a way that the data is passed via our server in Amazon facilities in the USA and deleted from it immediately at the end of each user session.

In addition, you can encrypt your data before it is sent. For more information, please follow this link:

http://www.s3rsync.com/index.php/FAQ#Is_it_secure.3F

Edward, thanks for your comments. We have proofread the FAQ and corrected the grammar to the best of our understanding.

Daniela Vujinovic

Business Development

ActiveTech Ltd.

The S3Rsync.com website is no longer responding. I need another way to run my backups to the Amazon S3 service. What do I do?

Hi Brett,

Thanks for letting us know of your situation.

Unfortunately, as Linus explained in an earlier comment, BackupAssist isn’t affiliated with S3Rsync, and it sounds as if you’re having issues with their service.

My best advice is to contact S3Rsync directly to find out what the situation is and whether they have any workaround available for this downtime.

Alternatively you could configure a temporary backup for your data to another cloud provider (if you have one) or to a local drive to manually take offsite while you wait for your service to become available again.

Sorry we can’t provide any further information on this; however, their service issue isn’t something we’re able to resolve from our end.

Stuart

BackupAssist Support

Due to a critical administrative error, the domain was not renewed on time.

The problem has been fixed, and the s3rsync service is up and running.

We apologize for any inconvenience.

Regards,

Daniela Vujinovic

Business Development

ActiveTech Ltd.

So when are you going to build native Amazon S3 API support straight into the product? I’ve managed to integrate it into our Linux backup pretty easily. Surely it can’t be that hard to build it in and bypass the third-party service?

I too would like to know whether you are planning to build in direct support for Amazon S3 rather than use a third party. This should be a priority!

My S3Rsync backup job was working for a few weeks, but now, with no changes on my side, it has started failing. I also can no longer run the test within BackupAssist for that specific job. It gives me a “test failed 225 ssh error”.

Hi Hermann,

Have you tried connecting to your Rsync destination using SSH outside of BackupAssist, to make sure that the destination hasn’t been changed in any way that would cause these errors?

Also make sure you’re running the latest version of BackupAssist and try re-registering the backup job with the Rsync destination.

If these suggestions don’t work, then I’d recommend that you raise a support ticket for further review.
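For the SSH check suggested above, this sketch runs OpenSSH's `ssh` client non-interactively with the same server, port and key file that the BackupAssist job uses, then classifies the most common OpenSSH error messages. The function names and categories are our own, and it does not explain what BackupAssist's "225" code means; it only helps tell a DNS or network problem apart from a rejected key or a changed host key. It assumes an OpenSSH client is installed and on the PATH.

```python
import subprocess

def ssh_check_command(username: str, key_path: str,
                      server: str = "farm.s3rsync.com", port: int = 22) -> list[str]:
    """Build a non-interactive OpenSSH login attempt using the s3rsync.com key."""
    return [
        "ssh",
        "-i", key_path,            # private key file supplied by s3rsync.com
        "-p", str(port),           # 22 unless advised otherwise
        "-T",                      # no pseudo-terminal; we only care about the login
        "-o", "BatchMode=yes",     # fail instead of prompting for a password or host key
        "-o", "ConnectTimeout=15",
        f"{username}@{server}",
    ]

def diagnose(stderr: str) -> str:
    """Map common OpenSSH error text to a likely cause."""
    text = stderr.lower()
    if "could not resolve hostname" in text:
        return "dns: the server name does not resolve"
    if "connection refused" in text or "timed out" in text:
        return "network: the server or port is unreachable"
    if "host key verification failed" in text:
        return "host key: changed on the server or not yet trusted"
    if "permission denied" in text:
        return "authentication: key file or username rejected"
    return "unknown: no common SSH error found"

def check(username: str, key_path: str) -> str:
    # stdin is closed so any remote shell exits at once instead of waiting for input.
    result = subprocess.run(ssh_check_command(username, key_path),
                            stdin=subprocess.DEVNULL, capture_output=True,
                            text=True, timeout=60)
    return diagnose(result.stderr)
```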