(or why YOU should invest in server backups)

You never know what’s around the corner. Fire, flood or even good old-fashioned malfunctions can bring your servers offline. Sometimes it’s a slog getting your boss to see this.

IT infrastructure sits in a bubble in the minds of some senior managers: never in need of replacement, invulnerable to damage and with no need for protection. Today you’re going to pop that bubble and we’d like to help.

We’ve got three real-life stories of companies suffering from corruptions, accidents and angry ex-employees. Some of them got out all right; some of them, well, aren’t around anymore. If you think your boss needs shocking into upgrading or, heaven forbid, investing in backup for the first time, read on.

If you’re one of those whose boss has seen the light and recognizes the power of data and systems, and their need for protection, you could stop reading now. But, well, you’d miss out on a great article and a decent serving of self-congratulation.

People sabotage things, like your business

JournalSpace was a small, intimate blogging platform that after 6 years of hard slog had clocked up over 16,000 active blogs as well as a highly engaged community. In 2008 they went offline.

Turns out the person running the IT had been thieving from the company and when he was dismissed he took revenge on the servers. The JournalSpace crew thought that their RAID would suffice as a backup but that was no match for their angry sysadmin. From JournalSpace via TechCrunch:

“It was the guy handling the IT (and, yes, the same guy who I caught stealing from the company, and who did a slash-and-burn on some servers on his way out) who made the choice to rely on RAID as the only backup mechanism for the SQL server. He had set up automated backups for the HTTP server which contains the PHP code, but, inscrutably, had no backup system in place for the SQL data.”

Ouch. When they found out that damage had been done, they took the drives to a recovery specialist, who told them the drives had been overwritten and were unrecoverable. The business collapsed in an instant. Done. Gone. Forever. All because they didn’t have a reliable, redundant, automated backup plan.

Buildings burn down, including servers inside them

At around 4 am on 17 April 2013, a water pipe in the Old County Building in Macomb County, Michigan, sprang a leak. The water eventually found its way to an electrical wire in the walls, which in turn sparked a fire. At 10 am smoke alarms started blaring and county workers rushed from their workstations. Around midday, some of the staff trudged back into the building only to find that they had no phone or internet access. Turns out that all of the County’s servers and switches were in the basement and had been destroyed by the fire.

The damage was so bad that the county had to declare a state of emergency that lasted for nearly a week as it struggled to operate without phones, IT systems or internet for its employees. The Treasurer’s Office, the Titles Office, even the County Court and Prosecutor’s Office were left scrambling for their pens, notepads and carbon paper.

If a large, well-resourced US county can be technologically thrust back to the 1950s by a simple electrical fire, it doesn’t take much to imagine what a similar fire would do to a small business. While it won’t bring back the physical stuff, a well-oiled offsite backup regime and disaster recovery plan can help bring a business back to life after a disaster like the one at Macomb County.

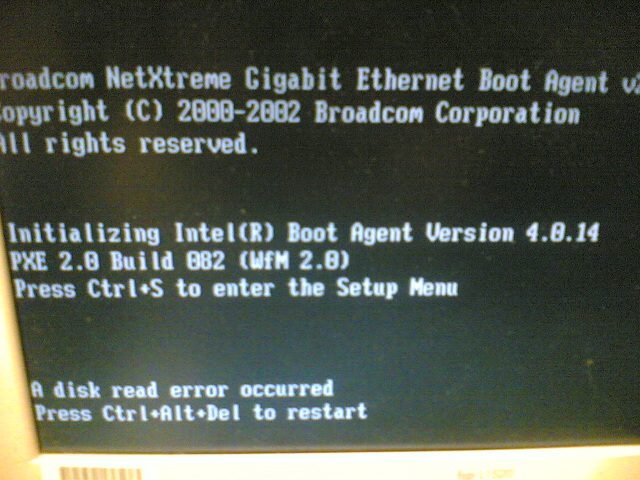

Servers just die

It’s no secret: servers die no matter how much time or effort we spend maintaining them. The secret is making sure what’s on them doesn’t die with them or require painful and expensive extraction via a data recovery service.

If your boss needs one last reason, take this close call from Spiceworks user AlanK who, despite having a backup system in place, ended up losing a week’s worth of data and suffering some serious downtime due to dodgy backups.

“It took about 3 hours to restore the previous night’s tape up to the point where the program reported a data error on the tape. I tried the restore again with the same results. I reported to my boss that I’d have to restore a day back, so get ready to re-enter the previous day’s data.

“Unfortunately by the time everyone else was leaving, the previous night’s restore failed.

“On through the night I continued feeding tapes and having them fail. At 4:00 in the morning I had the previous Thursday’s tape and it was the last recent tape available. I knew that if the last tape failed that I’d be killed.

“On the 2nd try, the last tape — one week old — finally completed the restore. One entire week of business records had been lost and had to be manually recovered. The time? 7:45AM. I had spent 24 hours restoring tapes until I finally had a working system.

“The next day I placed a rush order for an entirely new backup system with a tape autochanger to ensure that tapes get cycled properly and a Grandfather-Father-Son rotation scheme. Finally, I instituted a plan to perform test restores periodically.”
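If the Grandfather-Father-Son scheme AlanK mentions is new to you (or your boss), here is a rough sketch of how such a rotation might decide which backups to keep. It is not AlanK’s actual setup. The tier rules (monthly on the 1st, weekly on Fridays, daily otherwise), the retention periods and the function names are all assumptions made up for illustration, and real backup products each define their own.

```python
from datetime import date, timedelta

# Assumed retention periods in days for each tier (illustrative only).
RETENTION_DAYS = {"son": 7, "father": 35, "grandfather": 365}


def gfs_tier(day: date) -> str:
    """Classify a backup date under an assumed GFS policy."""
    if day.day == 1:
        return "grandfather"  # monthly backup, kept longest
    if day.weekday() == 4:    # Monday is 0, so 4 is Friday
        return "father"       # weekly backup
    return "son"              # daily backup, recycled quickly


def backups_to_keep(backup_dates, today: date):
    """Return the backup dates still inside their tier's retention window."""
    keep = []
    for d in backup_dates:
        age = (today - d).days
        if 0 <= age <= RETENTION_DAYS[gfs_tier(d)]:
            keep.append(d)
    return sorted(keep)


if __name__ == "__main__":
    today = date(2024, 3, 15)
    # Pretend a backup was taken every day for the last 60 days.
    history = [today - timedelta(days=i) for i in range(60)]
    for d in backups_to_keep(history, today):
        print(d.isoformat(), gfs_tier(d))
```

The point of the scheme is that a bad daily tape never leaves you with nothing: older weekly and monthly copies are still on the shelf. It only helps, though, if those copies actually restore, which is why the periodic test restores matter just as much as the rotation.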